Dive Brief:

- Nvidia is planning a new generation of AI-optimized chip technologies just two months after unveiling its Blackwell GPU family, the company said last week during its Q1 2025 earnings call.

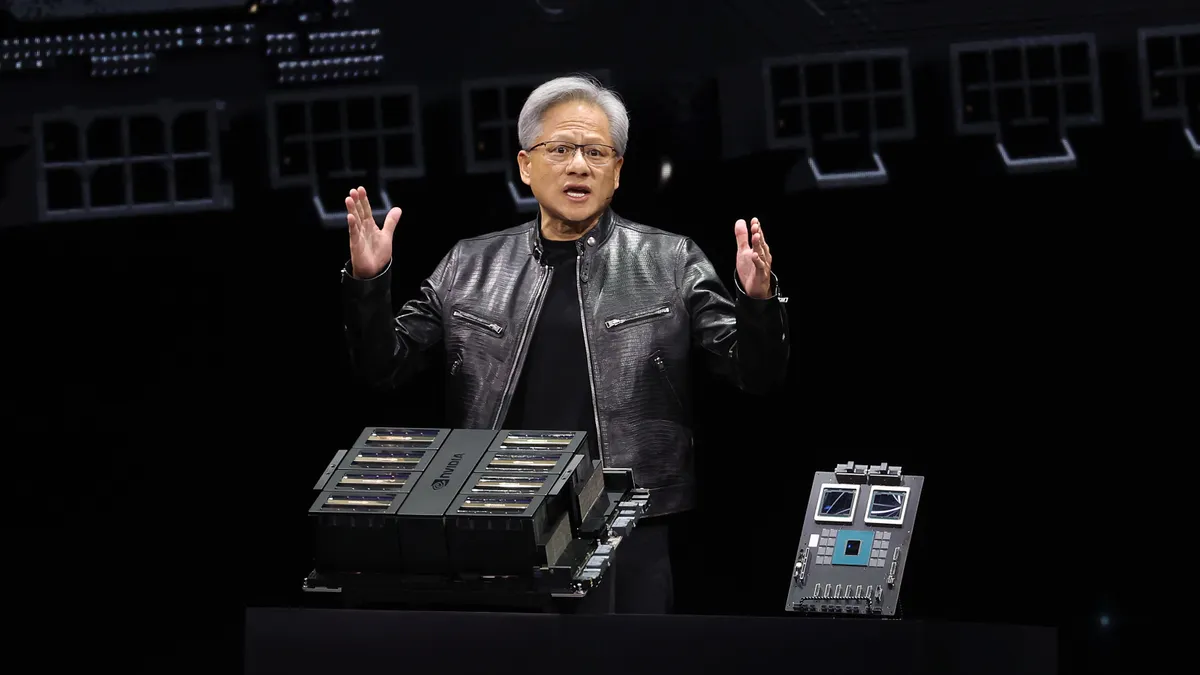

- “I can announce that after Blackwell, there's another chip, and we are on a one-year rhythm,” Nvidia President and CEO Jensen Huang said. “You're going to see… new CPUs, new GPUs, new networking NICs, new switches [and] a mound of chips that are coming.”

- The chipmaker’s revenue skyrocketed to $26 billion in the first quarter of its fiscal year, up 262% year over year. Hyperscaler demand helped drive data center revenue, Nvidia’s largest reported segment, to $22.6 billion for the quarter, up 427% compared to a year ago. “Large cloud providers continue to drive strong growth as they deploy and ramp Nvidia AI infrastructure at scale,” EVP and CFO Colette Kress said.

Dive Insight:

Nvidia’s fortunes rose steeply in the past year, buoyed by surging demand for AI processing power. As a key supplier of graphics processing units, the company occupies a pivotal position in the generative AI supply chain.

“The demand for GPUs in all the data centers is incredible — we're racing every single day,” Huang said. “The CSPs are consuming every GPU that's out there.”

As the company ramps up Blackwell production, Huang said there will be short-term supply constraints on the previous generation of chips.

“We expect demand to outstrip supply for some time as we now transition to H200,” Huang said.

Most enterprises aren’t directly impacted by manufacturing shortfalls. But GPU consumption patterns tie back to enterprise customers along three major routes: model builders, cloud service providers and device manufacturers. Nvidia hardware is embedded in every leg of that journey.

Building large language models from the ground up consumes massive amounts of compute for training and data processing. Kress reported that Huang hand-delivered the first H200 system to OpenAI CEO Sam Altman, and it powered the company’s GPT-4o demos earlier this month.

“Our production shipments will start in Q2, ramp in Q3 and customers should have data centers stood up in Q4,” Kress said.

While the three largest cloud providers — AWS, Microsoft and Google Cloud — have each developed their own proprietary AI chip technologies, they are also jostling for access to the latest Nvidia products.

“We’re in every cloud,” Huang said.

Hyperscaler business represents roughly 40% of Nvidia’s data center revenue, according to Kress.

GPU deployment is gaining additional momentum as enterprises cycle into device refresh mode.

Nvidia expanded its partnership with Microsoft around AI PCs on Tuesday. A new line of Copilot+ PCs optimized for LLM tools will be outfitted with Nvidia and AMD graphics cards, Microsoft said in an announcement.

Dell, HP, Lenovo and Samsung have also entered the race to embed GPU capabilities in workstations.

“The PC computing stack is going to get revolutionized,” Huang said.